Processing massive medical image datasets can quickly turn your computer into a paperweight if you don’t handle memory properly.

Medical imaging files are notoriously large: a single CT study can consume a gigabyte or more of RAM once it is loaded.

When you’re dealing with hundreds or thousands of these files, your system memory becomes the bottleneck that determines whether your analysis succeeds or crashes spectacularly.

The challenge is real. A typical hospital generates terabytes of medical imaging data monthly, and researchers often need to process entire patient cohorts simultaneously.

Without proper memory optimization techniques, you’ll find yourself constantly battling out-of-memory errors raised while your DICOM library loads pixel data, along with sluggish performance that makes data analysis impractical.

Why Medical Images Demand Special Memory Attention

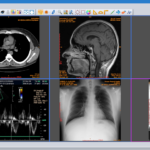

Medical images aren’t your typical JPEGs. DICOM files (Digital Imaging and Communications in Medicine) contain far more than just pixel data.

They include extensive metadata, multiple image slices, and often store data in uncompressed formats to preserve diagnostic quality.

Consider these typical file sizes:

- Chest X-ray: 8-50 MB per image

- CT scan: 100-500 MB per study (multiple slices)

- MRI scan: 200-1000 MB per study

- Whole slide pathology: 1-10 GB per image

When you load these files into memory for processing, the actual RAM consumption often exceeds the file size by 2-3x due to decompression and data structure overhead.

A DICOM library will typically load the entire image array into memory, which means a 500MB CT scan might consume 1.5GB of RAM once loaded.

Memory-Efficient Loading Strategies

Lazy loading represents your first line of defense against memory exhaustion. Instead of loading entire datasets upfront, you load images only when needed for processing.

This approach works particularly well when you’re applying the same operation across multiple files sequentially.
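A minimal sketch of lazy loading as a Python generator. The names `lazy_process`, `load`, and `process` are hypothetical; the loader is passed in as a function so the sketch does not depend on any particular imaging library.

```python
from pathlib import Path
from typing import Callable, Iterable, Iterator, Tuple, TypeVar

T = TypeVar("T")
R = TypeVar("R")


def lazy_process(
    paths: Iterable[Path],
    load: Callable[[Path], T],
    process: Callable[[T], R],
) -> Iterator[Tuple[Path, R]]:
    """Yield (path, result) pairs while holding one loaded image at a time."""
    for path in paths:
        image = load(path)       # runs only when the caller asks for the next item
        result = process(image)
        del image                # drop our reference before handing back the result
        yield path, result
```

With pydicom, for example, `load` could be something like `lambda p: pydicom.dcmread(p).pixel_array`. Only the small per-image results accumulate on the caller's side, not the pixel arrays.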

Here’s how different loading strategies compare:

| Loading Method | Memory Usage | Processing Speed | Best For |
| --- | --- | --- | --- |
| Load All Files | Very High | Fastest | Small datasets (<10 GB) |
| Lazy Loading | Low | Moderate | Large sequential processing |
| Chunk Processing | Moderate | Good | Balanced workflows |

Streaming processing takes lazy loading further by processing images in smaller chunks or tiles. This technique proves especially valuable for whole slide pathology images that can reach 10GB in size.

You divide the image into manageable 1024×1024 pixel tiles and process each tile independently.
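The tiling step can be sketched as a small coordinate generator. `iter_tiles` is a hypothetical helper; it only computes tile bounds, so it works with any reader that can fetch a rectangular region.

```python
from typing import Iterator, Tuple


def iter_tiles(
    height: int, width: int, tile: int = 1024
) -> Iterator[Tuple[int, int, int, int]]:
    """Yield (row_start, row_stop, col_start, col_stop) for each tile.

    Tiles on the right and bottom edges are clipped to the image bounds.
    """
    if tile <= 0:
        raise ValueError("tile must be positive")
    for r in range(0, height, tile):
        for c in range(0, width, tile):
            yield r, min(r + tile, height), c, min(c + tile, width)
```

For an array-like `image`, each tile is then `image[r0:r1, c0:c1]`. Filters with a spatial footprint usually need overlapping tiles so that tile borders do not show up in the output; this sketch leaves that out.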

Memory mapping offers another powerful approach. Instead of loading the entire file into RAM, you map it to virtual memory and let the operating system handle data movement.

This technique works well when you need random access to different parts of large image files.
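One way to try memory mapping from Python is `numpy.memmap`. The sketch below assumes a raw, uncompressed `uint16` volume with a known shape; the file name and shape are made up for the demo, and compressed pixel data cannot be mapped this way.

```python
import os
import tempfile

import numpy as np

# Hypothetical layout: (slices, rows, cols) of raw uint16 voxels.
shape = (64, 256, 256)
path = os.path.join(tempfile.mkdtemp(), "volume.raw")

# Create a demo file; in practice the file would already exist on disk.
writer = np.memmap(path, dtype=np.uint16, mode="w+", shape=shape)
writer[10] = 1000            # fill one slice so there is something to read back
writer.flush()
del writer

# Map the file read-only. No pixel data is read until the array is indexed.
volume = np.memmap(path, dtype=np.uint16, mode="r", shape=shape)
slice_mean = float(volume[10].mean())   # touches only the pages backing slice 10
```

The operating system pages data in on access and can evict it again under memory pressure, so resident memory tracks the slices you actually touch rather than the file size.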

Smart Data Type Optimization

Medical images often store data in 16-bit or 32-bit formats, but many processing tasks don’t require this precision.

Converting to 8-bit representation halves memory usage for 16-bit data and cuts it to a quarter for 32-bit data, without significantly impacting analysis results for many applications.

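The conversion process requires careful consideration of your data range. Medical images can use much of the 16-bit range (0-65535 for unsigned data) to represent different tissue densities, even though the values that matter for a given task usually fall within a much narrower window.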

When converting to 8-bit, you need to either normalize the data or clip extreme values intelligently.
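A sketch of the clip-then-normalize conversion using NumPy. The function names are hypothetical, and the window bounds are assumptions that depend on the modality and the task.

```python
import numpy as np


def to_uint8(image: np.ndarray, low: float, high: float) -> np.ndarray:
    """Clip intensities to [low, high], then rescale linearly to 0..255."""
    if high <= low:
        raise ValueError("high must be greater than low")
    clipped = np.clip(image.astype(np.float32), low, high)
    scaled = (clipped - low) / (high - low) * 255.0
    return np.round(scaled).astype(np.uint8)


def percentile_window(image: np.ndarray, lower_pct: float = 1.0,
                      upper_pct: float = 99.0) -> tuple:
    """Pick a window from the data itself so a few outliers do not dominate."""
    low, high = np.percentile(image, [lower_pct, upper_pct])
    return float(low), float(high)
```

Everything outside the window collapses to 0 or 255, so the window should cover the intensities the analysis depends on. The intermediate `float32` copy is temporary; for very large images the conversion can be applied tile by tile.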

Floating-point operations during image processing can temporarily double or triple memory consumption.

If you’re applying filters or mathematical transformations, consider working with integer data types when possible and only converting to float for specific calculations.
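One way to keep float temporaries small is to convert a single slice at a time and write the result back into the integer volume. The sketch below uses gamma correction purely as an example operation; the function name and the `max_value` default are assumptions.

```python
import numpy as np


def gamma_correct_inplace(volume: np.ndarray, gamma: float,
                          max_value: int = 65535) -> None:
    """Apply gamma correction slice by slice, overwriting the integer volume."""
    for i in range(volume.shape[0]):
        # Only this one slice exists as float32 at any given time.
        work = volume[i].astype(np.float32) / max_value
        np.power(work, gamma, out=work)    # computed in place, no new array
        work *= max_value
        volume[i] = np.round(work).astype(volume.dtype)
```

Converting the whole volume to `float32` first would double the footprint of 16-bit data before any processing starts; here the extra memory is bounded by a couple of slices.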

Batch Processing and Pipeline Design

Effective batch sizing balances memory usage with processing efficiency. Processing images one by one minimizes memory usage but introduces significant I/O overhead, while processing too many simultaneously exhausts available RAM.

The optimal batch size depends on your available memory and average image size. A useful formula: Batch Size = (Available RAM × 0.7) / (Average Image Size × 3). The factor of 3 accounts for processing overhead and temporary variables.
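The formula translates directly into a small helper. The function and parameter names are hypothetical; the defaults of 0.7 and 3 are the values from the formula above.

```python
def batch_size(available_ram_bytes: int, avg_image_bytes: int,
               ram_fraction: float = 0.7, overhead_factor: float = 3.0) -> int:
    """Batch Size = (Available RAM x 0.7) / (Average Image Size x 3), at least 1."""
    if avg_image_bytes <= 0:
        raise ValueError("avg_image_bytes must be positive")
    budget = available_ram_bytes * ram_fraction
    per_image = avg_image_bytes * overhead_factor
    return max(1, int(budget // per_image))


# 32 GB of available RAM and 500 MB images: 22.4 GB / 1.5 GB, rounded down.
example = batch_size(32_000_000_000, 500_000_000)
```

Rounding down keeps the estimate on the safe side, and the floor of 1 means a single oversized image is still attempted rather than silently skipped.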

Pipeline processing allows you to overlap I/O operations with computation. While one batch processes, the subsequent batch loads from disk. This approach improves hardware utilization at the cost of holding roughly one extra batch in memory, so account for it when you size your batches.

Consider implementing a producer-consumer pattern where one thread loads images into a bounded queue while processing threads consume from that queue. Because the loader blocks whenever the queue is full, this design prevents memory buildup while maintaining steady processing throughput.
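A minimal sketch of the producer-consumer pattern with the standard library's `queue` and `threading` modules. `run_pipeline`, `load`, and `process` are hypothetical names, and a single consumer is used to keep the example short.

```python
import queue
import threading
from typing import Any, Callable, Iterable, List

_DONE = object()  # sentinel that marks the end of the stream


def run_pipeline(paths: Iterable[Any], load: Callable[[Any], Any],
                 process: Callable[[Any], Any], max_queued: int = 4) -> List[Any]:
    """Load on a background thread while the calling thread processes."""
    buffer: queue.Queue = queue.Queue(maxsize=max_queued)

    def producer() -> None:
        try:
            for path in paths:
                buffer.put(load(path))   # blocks while the queue is full
            buffer.put(_DONE)
        except Exception as exc:         # forward loader errors to the consumer
            buffer.put(exc)

    threading.Thread(target=producer, daemon=True).start()

    results = []
    while True:
        item = buffer.get()
        if item is _DONE:
            break
        if isinstance(item, Exception):
            raise item
        results.append(process(item))
    return results
```

The `maxsize` argument is what bounds memory: at most `max_queued` loaded images wait in the queue, plus one being loaded and one being processed. Threads help here mainly because file reads release the interpreter lock; CPU-bound processing in pure Python would need processes instead.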

Memory Monitoring and Cleanup

Active memory monitoring prevents crashes before they happen. Track both process memory usage and system-wide memory availability.

When memory usage exceeds safe thresholds (typically 80% of available RAM), implement cleanup strategies or reduce batch sizes.
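The threshold rule can be sketched as a function that shrinks the batch when memory use is too high. `adjust_batch_size` is a hypothetical helper, and halving is just one possible policy. The caller supplies the memory reading; with the third-party psutil package, for instance, it could be `psutil.virtual_memory().percent / 100`.

```python
def adjust_batch_size(current: int, used_fraction: float,
                      threshold: float = 0.8, minimum: int = 1) -> int:
    """Halve the batch size when memory use exceeds the threshold."""
    if not 0.0 <= used_fraction <= 1.0:
        raise ValueError("used_fraction must be between 0 and 1")
    if used_fraction > threshold:
        return max(minimum, current // 2)
    return current
```

Calling this between batches lets a long job back off gradually instead of failing once it crosses the limit.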

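In CPython, a large array is released as soon as its last reference disappears, but lingering references (a variable still in scope, a cached result, a reference cycle) can keep it alive much longer than you expect.

Explicit cleanup using del statements, plus gc.collect() to break reference cycles, lets that memory be reclaimed promptly, although the allocator may not hand all of it back to the operating system right away. This practice becomes critical in long-running processing jobs.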
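A small demonstration of what `del` and `gc.collect()` each do, assuming CPython's reference counting. `Volume` is a hypothetical stand-in for a large image object, and weak references are used only to observe when an object is actually freed.

```python
import gc
import weakref


class Volume:
    """Stand-in for a large image object (hypothetical)."""

    def __init__(self) -> None:
        self.pixels = bytearray(1_000_000)
        self.parent = None


gc.disable()  # only to make the demo deterministic; not advice for production

# Case 1: no reference cycle. `del` removes the last reference and the
# object is freed right away by reference counting.
plain = Volume()
plain_ref = weakref.ref(plain)
del plain
freed_by_del = plain_ref() is None

# Case 2: a reference cycle keeps the object alive after `del` ...
cyclic = Volume()
cyclic.parent = cyclic
cyclic_ref = weakref.ref(cyclic)
del cyclic
alive_after_del = cyclic_ref() is not None

gc.collect()  # ... until the cycle collector runs
freed_by_collect = cyclic_ref() is None

gc.enable()
```

In practice this means `del` is enough for plain arrays, while `gc.collect()` matters when objects reference each other, as in callbacks, caches, or parent-child links between series and slices.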

Memory profiling tools help identify bottlenecks and leaks. Tools like memory_profiler for Python or Valgrind for C++ applications reveal exactly where your memory goes and highlight optimization opportunities.

Advanced Optimization Techniques

Compressed data structures can significantly reduce the memory footprint for sparse medical images. Many medical scans contain large regions of background (air or space) that compress extremely well.

Using compressed sparse formats can reduce memory usage by 70-90% for appropriate image types.
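To illustrate the idea, here is a hand-rolled coordinate-style representation built with NumPy: it stores only the non-background pixels and their flat positions. This is a simplified stand-in rather than a specific compressed format (libraries such as `scipy.sparse` provide those), and the function names are hypothetical.

```python
import numpy as np


def to_sparse(image: np.ndarray, background: int = 0):
    """Return (shape, flat_indices, values) for the non-background pixels."""
    flat = image.ravel()
    indices = np.flatnonzero(flat != background)
    if flat.size <= np.iinfo(np.uint32).max:
        indices = indices.astype(np.uint32)   # 4-byte indices when they fit
    return image.shape, indices, flat[indices]


def from_sparse(shape, indices, values, background: int = 0) -> np.ndarray:
    """Rebuild the dense image from the sparse representation."""
    out = np.full(int(np.prod(shape)), background, dtype=values.dtype)
    out[indices] = values
    return out.reshape(shape)
```

Each stored pixel costs its value plus a 4-byte index, so the representation only pays off when most of the image is background; for a 16-bit image the break-even point is roughly one third foreground.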

Multi-level processing handles images at different resolutions. Start analysis with low-resolution versions to identify regions of interest, then process only relevant areas at full resolution.

This approach proves remarkably effective for whole slide pathology and extensive radiology studies.
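The coarse-to-fine idea can be sketched with strided subsampling standing in for a real low-resolution level. Pyramidal formats usually store precomputed levels; `find_roi`, the threshold, and the step of 8 are assumptions made for the illustration.

```python
import numpy as np


def find_roi(image: np.ndarray, threshold: float, step: int = 8):
    """Locate a bounding box on a subsampled view, in full-resolution coordinates.

    Returns (row_start, row_stop, col_start, col_stop), or None if nothing
    exceeds the threshold at the coarse level.
    """
    coarse = image[::step, ::step]                 # strided view, no copy
    rows, cols = np.nonzero(coarse > threshold)
    if rows.size == 0:
        return None
    height, width = image.shape
    # Pad by one step on each side to cover pixels the subsampling skipped.
    r0 = max(int(rows.min()) * step - step, 0)
    r1 = min(int(rows.max()) * step + step, height)
    c0 = max(int(cols.min()) * step - step, 0)
    c1 = min(int(cols.max()) * step + step, width)
    return r0, r1, c0, c1
```

Only `image[r0:r1, c0:c1]` then needs to be processed at full resolution. Structures smaller than `step` pixels can be missed entirely at the coarse level, so the step should be chosen with the smallest feature of interest in mind.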

Consider external memory algorithms for truly massive datasets. These algorithms process data larger than available RAM by carefully orchestrating disk I/O operations.

While slower than in-memory processing, they enable analysis of datasets that would otherwise be impossible to handle.